kcp: Kubernetes-like control plane

Disclaimer: The Cloud Native Simplified paradigm is explained here. This article doesn't aim to explain every detail of kcp; we will only cover it at a 101 level.

Intro

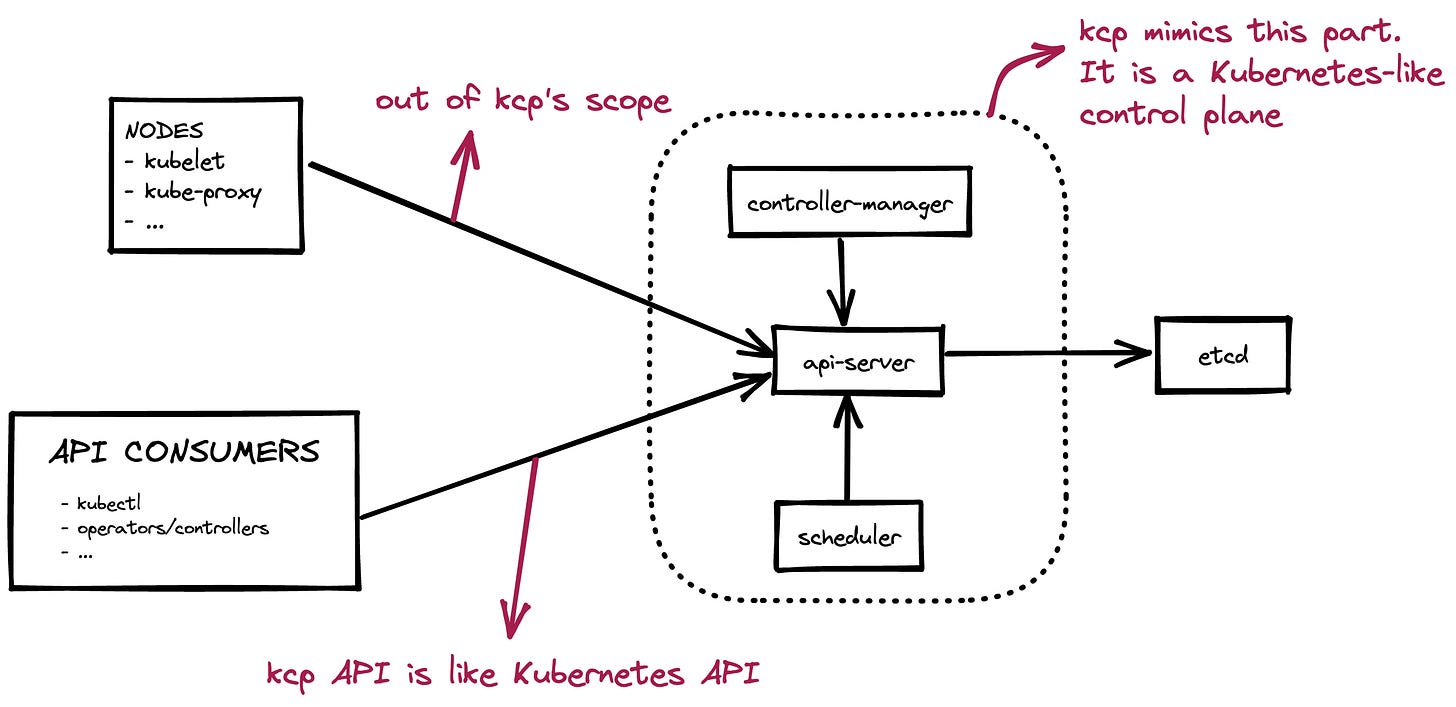

kcp is an open-source project that aims to solve some of the problems Kubernetes users face in multi-tenant and/or multi-cluster setups. Before jumping into the problems, let's take a high-level look at the architecture.

Simplified Architecture

kcp serves an API that is compatible with the Kubernetes API. You can run your kubectl commands against that API.

kcp also contains some controllers and schedulers. That is why the project calls itself a "Kubernetes-like control plane" rather than a "Kubernetes-like API server".

Currently, the core functionality is provided by a single binary (which also starts an etcd instance if necessary), but it may be split into separate components over time. The project also includes some helper components for specific use cases.

The API

The kcp API provides basic Kubernetes types such as ConfigMap, Secret, and the RBAC types.

By default, the kcp API doesn't provide container orchestration types such as Node, Pod, and Deployment.

kcp supports CRDs.

The kcp API also provides additional built-in types to address specific problems, chiefly multi-tenancy and multi-cluster management.

Problem 1: Multi-tenancy

Multi-tenancy is notoriously hard in Kubernetes.

For standard tenants who run standard workloads like Deployments, it is not easy, but it is doable: you need RBAC objects, Namespaces, NetworkPolicies, ResourceQuotas, PodSecurityPolicies (removed in Kubernetes 1.25 in favor of Pod Security Admission), etc. to limit and isolate the tenants.

When it comes to advanced tenants who want to run their own operators with their own CRDs, it is a huge pain. CRDs are cluster-scoped, and Kubernetes has no mechanism to isolate tenants from each other's CRDs. Many people end up using a separate cluster per tenant in this case.

Solution 1: Workspaces

kcp implements multi-tenancy through Workspaces.

Workspaces are like namespaces but provide full isolation. Creating a workspace is like creating a fresh new cluster and switching your kubeconfig to it.

Behind the scenes, kcp serves a logical cluster per workspace and distinguishes workspaces by path in the API server URL (for example, /clusters/root:blog-example).

With the kubectl-ws plugin, it is easy to create and use workspaces in kcp.
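To make the URL scheme concrete, here is a minimal sketch in Python. It assumes only what the `kubectl get ws` output in this section shows: each workspace is served under `<server>/clusters/<workspace path>`, and a workspace path is a colon-separated list of names starting at `root`. The helpers `workspace_url`, `child_path`, and `parent_path` are hypothetical and are not part of kcp or the kubectl-ws plugin.

```python
def workspace_url(server: str, path: str) -> str:
    """Build the per-workspace API URL, e.g. for the path 'root:blog-example'."""
    # Hypothetical helper: mirrors the URLs printed by `kubectl get ws`.
    return f"{server.rstrip('/')}/clusters/{path}"


def child_path(parent: str, name: str) -> str:
    """Path of a child workspace: 'root' + 'blog-example' -> 'root:blog-example'."""
    return f"{parent}:{name}"


def parent_path(path: str) -> str:
    """Parent workspace path, i.e. where `kubectl ws ..` would take you."""
    if ":" not in path:
        raise ValueError(f"{path!r} has no parent workspace")
    return path.rsplit(":", 1)[0]


if __name__ == "__main__":
    server = "https://192.168.0.18:6443"
    ws = child_path("root", "blog-example")
    print(workspace_url(server, ws))  # https://192.168.0.18:6443/clusters/root:blog-example
    print(parent_path(ws))            # root
```

Because every workspace gets its own URL path, a kubeconfig pointing at a different path talks to a different logical cluster, which is what makes a workspace feel like a separate cluster.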

Here is a short demo to show workspace creation and the isolation between workspaces.

```console
➜ kubectl ws current
Current workspace is "root".

➜ kubectl apply -f ./cert-manager.crds.yaml

➜ kubectl get crd
NAME                                  CREATED AT
certificaterequests.cert-manager.io   2022-11-03T18:52:52Z
certificates.cert-manager.io          2022-11-03T18:52:52Z
challenges.acme.cert-manager.io       2022-11-03T18:52:52Z
clusterissuers.cert-manager.io        2022-11-03T18:52:52Z
issuers.cert-manager.io               2022-11-03T18:52:52Z
orders.acme.cert-manager.io           2022-11-03T18:52:52Z

➜ kubectl ws create blog-example
Workspace "blog-example" (type root:organization) created.

➜ kubectl get ws
NAME           URL
blog-example   https://192.168.0.18:6443/clusters/root:blog-example
users          https://192.168.0.18:6443/clusters/root:users

➜ kubectl ws use blog-example
Current workspace is "root:blog-example" (type "root:organization").

➜ kubectl get crd
No resources found

➜ kubectl ws ..
Current workspace is "root".

➜ kubectl get crd
NAME                                  CREATED AT
certificaterequests.cert-manager.io   2022-11-03T18:52:52Z
certificates.cert-manager.io          2022-11-03T18:52:52Z
challenges.acme.cert-manager.io       2022-11-03T18:52:52Z
clusterissuers.cert-manager.io        2022-11-03T18:52:52Z
issuers.cert-manager.io               2022-11-03T18:52:52Z
orders.acme.cert-manager.io           2022-11-03T18:52:52Z
```

Problem 2: Multi-clusters

People run multiple clusters instead of one big cluster for reasons such as isolation, Kubernetes scalability limits, and multi-region or multi-cloud setups. SIG Multicluster, one of the special interest groups in the Kubernetes community, works on solutions to this problem, such as KubeFed. However, using multiple clusters as a single compute service is still a huge pain for users.

kcp has some solutions for the multi-cluster problem.

Solution 2: Syncer and Scheduler

SyncTarget is a kcp custom resource. Each SyncTarget represents a real, physical Kubernetes cluster.

Location is another kcp custom resource. With it, admins can group SyncTargets (that is, physical clusters) and expose them to end users as a single unit.

Each physical cluster runs a syncer, which is responsible for watching kcp, managing resources in the physical cluster, and reporting their status back to kcp.

Placement is also a kcp custom resource. With it, end users declare which Locations the workloads in their workspace should be assigned to. At this level, physical clusters and SyncTargets are invisible to end users.
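As a rough illustration of how a Location can group SyncTargets, the sketch below assumes the grouping is done with Kubernetes-style `matchLabels` selectors (every key/value pair must match; an empty selector matches everything). That mechanism is an assumption for illustration; the `SyncTarget` and `Location` classes, their fields, and the `members` function are hypothetical in-memory stand-ins, not kcp's actual types.

```python
from dataclasses import dataclass, field


@dataclass
class SyncTarget:
    """Hypothetical stand-in for a kcp SyncTarget: one physical cluster plus labels."""
    name: str
    labels: dict[str, str] = field(default_factory=dict)


@dataclass
class Location:
    """Hypothetical stand-in for a kcp Location: groups SyncTargets by label."""
    name: str
    instance_match_labels: dict[str, str] = field(default_factory=dict)


def matches(match_labels: dict[str, str], labels: dict[str, str]) -> bool:
    """matchLabels semantics: every pair must be present; an empty selector matches all."""
    return all(labels.get(k) == v for k, v in match_labels.items())


def members(location: Location, targets: list[SyncTarget]) -> list[str]:
    """Names of the SyncTargets (physical clusters) grouped by this Location."""
    return [t.name for t in targets if matches(location.instance_match_labels, t.labels)]


if __name__ == "__main__":
    targets = [
        SyncTarget("eu-cluster-1", {"region": "eu"}),
        SyncTarget("eu-cluster-2", {"region": "eu"}),
        SyncTarget("us-cluster-1", {"region": "us"}),
    ]
    eu = Location("eu", {"region": "eu"})
    print(members(eu, targets))  # ['eu-cluster-1', 'eu-cluster-2']
```

The end user only ever sees the Location named `eu`; which physical clusters sit behind it is the admin's concern.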
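The syncer's three responsibilities can be sketched as a toy reconcile pass. This is a simplified model under stated assumptions, not kcp's syncer: two in-memory dicts stand in for kcp and the physical cluster, and `sync_once` is called explicitly instead of reacting to watch events. The `Obj` class and the `sync_once` function are hypothetical names.

```python
from dataclasses import dataclass, field


@dataclass
class Obj:
    """Hypothetical resource: a desired spec plus an observed status."""
    spec: dict
    status: dict = field(default_factory=dict)


def sync_once(kcp: dict[str, Obj], physical: dict[str, Obj]) -> None:
    """One pass of a toy syncer: push specs down, then pull statuses up."""
    # Manage resources downstream: create or update objects to match kcp's spec.
    for name, obj in kcp.items():
        if name not in physical:
            physical[name] = Obj(spec=dict(obj.spec))
        elif physical[name].spec != obj.spec:
            physical[name].spec = dict(obj.spec)
    # Delete downstream objects that no longer exist in kcp.
    for name in list(physical):
        if name not in kcp:
            del physical[name]
    # Report status back upstream to kcp.
    for name, obj in kcp.items():
        obj.status = dict(physical[name].status)


if __name__ == "__main__":
    kcp = {"default/web": Obj(spec={"replicas": 2})}
    physical: dict[str, Obj] = {}
    sync_once(kcp, physical)                               # creates default/web downstream
    physical["default/web"].status = {"readyReplicas": 2}  # set by the physical cluster
    sync_once(kcp, physical)                               # copies the status back up
    print(kcp["default/web"].status)                       # {'readyReplicas': 2}
```

Specs flow from kcp down to the physical cluster and statuses flow back up, so users can keep working against kcp without ever talking to the physical cluster directly.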

```yaml
apiVersion: scheduling.kcp.dev/v1alpha1
kind: Placement
metadata:
  name: aws
spec:
  locationSelectors:
  - matchLabels:
      cloud: aws
  namespaceSelector:
    matchLabels:
      app: foo
  locationWorkspace: root:default:location-ws
```

In kcp, internal schedulers assign and distribute workloads to the real physical clusters. The ultimate goal is to let you use multiple clusters purely as compute resources, as if they were a single big cluster.
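To show what the `aws` Placement manifest in this section expresses, here is a toy scheduler in Python. It is illustrative only and is not kcp's scheduling algorithm. Assumptions: the entries in `locationSelectors` are ORed; the scheduler picks the first matching Location (by name) that has any SyncTargets; and namespaces selected by `namespaceSelector` are spread round-robin over that Location's SyncTargets. `locationWorkspace` (where the Locations live) is not modeled. `Placement`, `Location`, and `schedule` are hypothetical stand-ins.

```python
from dataclasses import dataclass


def matches(match_labels: dict[str, str], labels: dict[str, str]) -> bool:
    """matchLabels semantics: every pair must be present; an empty selector matches all."""
    return all(labels.get(k) == v for k, v in match_labels.items())


@dataclass
class Placement:
    location_selectors: list[dict[str, str]]  # assumed to be ORed
    namespace_selector: dict[str, str]        # matchLabels for namespaces


@dataclass
class Location:
    name: str
    labels: dict[str, str]
    sync_targets: list[str]  # SyncTargets (physical clusters) grouped by this Location


def schedule(placement: Placement, locations: list[Location],
             namespaces: dict[str, dict[str, str]]) -> dict[str, tuple[str, str]]:
    """Toy scheduler: map each selected namespace to a (Location, SyncTarget) pair."""
    candidates = sorted(
        (loc for loc in locations
         if any(matches(sel, loc.labels) for sel in placement.location_selectors)),
        key=lambda loc: loc.name,
    )
    # Hypothetical policy: use the first matching Location that has any SyncTargets.
    location = next((loc for loc in candidates if loc.sync_targets), None)
    if location is None:
        return {}
    selected = sorted(ns for ns, labels in namespaces.items()
                      if matches(placement.namespace_selector, labels))
    # Spread the selected namespaces round-robin over the Location's SyncTargets.
    targets = location.sync_targets
    return {ns: (location.name, targets[i % len(targets)]) for i, ns in enumerate(selected)}


if __name__ == "__main__":
    aws = Placement(location_selectors=[{"cloud": "aws"}], namespace_selector={"app": "foo"})
    locations = [
        Location("aws-eu", {"cloud": "aws"}, ["aws-eu-1", "aws-eu-2"]),
        Location("gcp-us", {"cloud": "gcp"}, ["gcp-us-1"]),
    ]
    namespaces = {"foo-a": {"app": "foo"}, "foo-b": {"app": "foo"}, "bar": {"app": "bar"}}
    print(schedule(aws, locations, namespaces))
    # {'foo-a': ('aws-eu', 'aws-eu-1'), 'foo-b': ('aws-eu', 'aws-eu-2')}
```

The user only states "AWS, for namespaces labeled `app: foo`"; which physical cluster each namespace lands on is decided by the scheduler, which is the "one big cluster" illusion described above.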

kcp supports advanced deployment strategies for scenarios such as affinity/anti-affinity, geographic replication, cross-cloud replication, etc.